12.0 Overview

Reinforcement Learning is a form of machine learning that has had primary significant success in game-playing and self-driving vehicles including cars (e.g. Kolter et al, 2010) and helicopters (Coates et al, 2008). Beyond those primary applications, reported application include traffic signal control (Wang et al, 2020), designing chips (Roy et al, 2022), optimizing chemical reactions (Zhou et al, 2017), bidding on advertisements (Jin et al, 2018), recommending news articles (Zheng et al, 2018), optimizing foreign exchange order placement and financial trading strategies (e.g. Huang, 2018), improving energy efficiency in Google’s data centers, and controlling commercial cooling systems (Luo et al, 2020). And there have also been reported applications in medecine (e.g. Tang et al, 2016; Yauney and Shah, 2018). For example, two MIT researchers (Yauney and Shah, 2018) used reinforcement learning to determine how to dose glastoblioma patients with the least amount of toxic anti-cancer drugs that would still be effective.

In 1969, Herbert Simon, an early AI researcher, said

“human problem solving, from the most blundering to the most insightful, involves nothing more than varying mixtures of trial and error and selectivity”.

This could have been a description of modern reinforcement learning systems which use trial and error to learn. Reinforcement learning systems learn by interacting with their environment via trial and error.

12.1 Optimal control theory

Before diving into reinforcement learning, it’s important to understand a predecessor technology, optimal control theory. As will be discussed in the chapter on robotics, optimal control theory is used today (end of 2022) to create the controllers for most commercial robots. As reinforcement learning progresses, it will likely supplant optimal control theory and produce robots that can do far more complex tasks than today’s robots. Optimal control theory is also used to create controllers for elevators and other systems that have complex sets of constraints.

Let’s talk about creating elevator controllers which can be done either using control theory (Pepyne et al, 1997) or reinforcement learning (Crites and Barto, 1996). Suppose, for example, that there are two elevators and twelve floors. For this elevator system, there are many different possible goals that one could set for the system including:

- Minimizing average wait time

- Minimizing the maximum wait time

- Minimizing the average total transport time

- Minimizing the number of times anyone has to wait more than one minute

- Minimizing the number of people waiting

- Minimizing the expected number of people whose wait time is greater than the average wait time

- Minimizing power consumption

In control theory, these would be alternative cost functions. In reinforcement learning, they would be termed reward functions. The behavior of the elevator system will be quite different based on the chosen cost/reward function. For example, a policy developed for one cost function might make the decision to stop at floor 7 and take the people waiting down. A policy developed under another cost function might go up to floor 8 to get the people at both floors. In either case, the idea is to choose a goal and create a control policy that optimizes the system for that goal.

A system has a dynamics model if it has both a reward function and a function (or table) that defines the transition probabilities from one state to other states. When a system has a dynamics model, it can use dynamic programming to learn an optimal policy. You can think of dynamic programming as an optimized way of recursively trying all the different possibilities in order to identify the value of state and infer an optimal control policy. There are two types of dynamic programming methods used:

- Value iteration uses a value function that assigns a value to each possible state. The value function computes the sum of the rewards starting from the current state and acting optimally. Rewards occurring farther in the future are assigned less value according to a discount factor than rewards occurring closer in time to the action taken. The optimal policy is then defined as the one that takes the optimal action (based on the value function) for each state.

- Policy iteration starts with a random policy, determines the current and future rewards that result from the policy, improves the policy, and iterates until the optimal policy is found.

Besides dynamic programming, there are other mathematical optimization methods such as the Linear-Quadratic-Gaussian (LQG) algorithm used to find optimal control policies.

In the real world, the large number of possible states and actions can make these mathematical optimization methods too computationally expensive to be a practical means of computing a policy.

12.2 Reinforcement learning

Reinforcement learning has the potential to learn a control policy for environments with large numbers of possible states and actions.

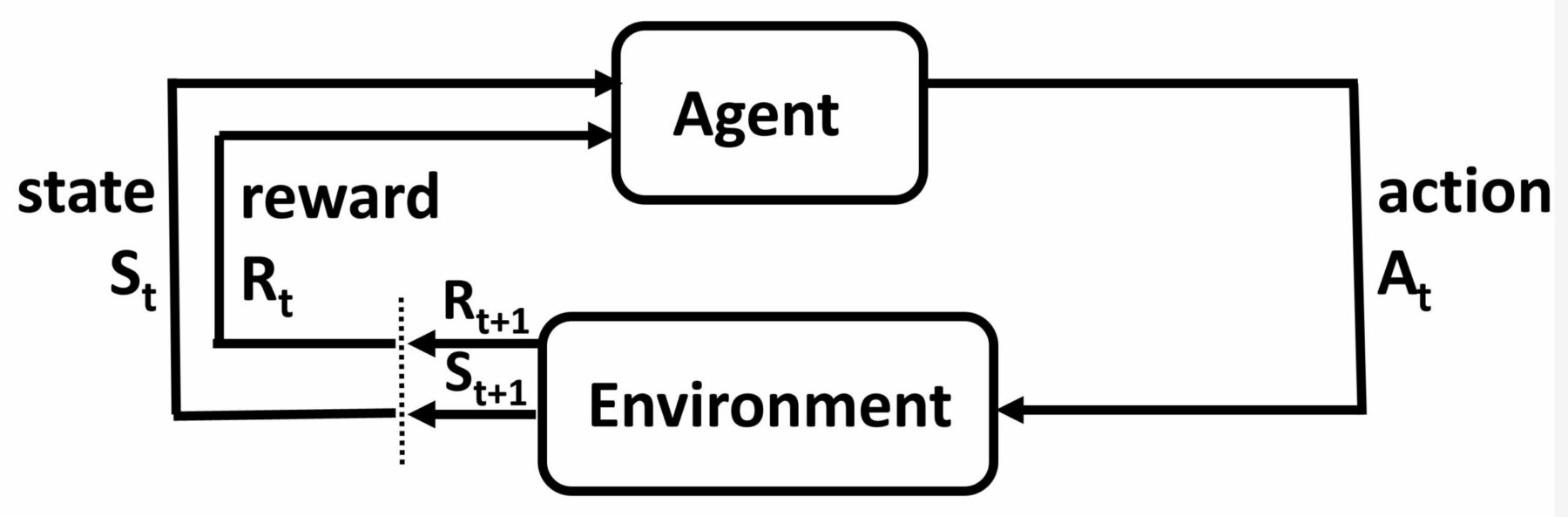

A reinforcement learning system can be considered an agent whose job is to react to the various states of the environment and take actions as illustrated below.

As the agent takes actions in the environment, the environment periodically produces rewards that can cause the agent to modify its behavior policy (i.e. which action it takes in as it encounter the different states).

Reinforcement learning is a class of algorithms for solving Markov Decision Problems (Sutton and Barto, 1998). A Markov Decision Problem, or MDP, consists of a set of states, a set of actions, a reward function that defines when a successful outcome has been achieved, and a set of state transition probabilities (i.e. the probabilities of moving from each state to each other state given a specific action). The goal of reinforcement learning algorithms is to learn a policy that maximizes the cumulative rewards over a period of time.

Let’s take a concrete example. Consider what a reinforcement learning system would need to do to learn the game of Pong…

Pong has a reward function that adds a point to the total score of the winner of the point. The job of the system is to learn a policy that defines what action the actor should take in response to each state of the game.

In response to each state, the actions available to the actor are moving the paddle either up or down. The action space (i.e. the set of all possible actions) is therefore very small – it consists of just two actions.

The state space (i.e. the set of all possible states such as all the possible images in a pong game) is considerably larger but isn’t huge. Many real-world environments have very large numbers of states. For example, the Tetris video game has 1060 states. That is a one plus 60 zeroes. A reinforcement learning system modeling a humanoid robot walking on two legs requires 10100 states (Duan, 2017).

The policy has a value function that assigns a value to each state, or state-action pair. The assigned value is expected return

A simple solution is for the reinforcement learning system to start with a random policy and gradually modify the policy until it leads to winning points. Each time it wins a point, it modifies its policy to increase the value of ALL the state-action pairs that immediately preceded the scored point. When it loses a point, it reduce the value of the state-action pairs.

If it plays long enough, it will start winning games and may even learn to play better than the best human. This is the trial-and-error aspect of reinforcement learning.

However, this simple solution will converge on optimal play slowly for Pong and may not work at all in more complex environments.

More sophisticated systems will try to decide which action choice(s) were responsible. This was termed the credit assignment problem by Marvin Minsky (1961).

One approach is to learn a value function which is the total estimated value of the rewards that will be achieved over time by taking each possible action from each possible state. The policy is then to take the action that maximizes the value function.

In Pong, there is only one possible reward for a sequence of actions – you either win or lose the point. However, in many reinforcement learning environments, a given action from a given state can lead to multiple rewards. For example, in a game like Atari Space Invaders, one is continually scoring points by hitting targets of different values and avoiding getting hit by “bombs”. A single action from a state can lead to hitting multiple targets and/or getting hit by bombs.

In the case of multiple rewards, the credit assignment problem is more difficult. Here, one wants to assign more credit to actions close in time to a reward and less credit to actions farther away in time. This is typically done with a discount factor that determines how much less credit is given to an action that is farther away in time from that reward.

The sequence of states and actions that lead to a specific reward (or lack of reward) is termed a trajectory.

Each reinforcement learning task also has several characteristics including:

Discrete vs. continuous actions. It’s generally easy to characterize the actions in a game as discreet actions (e.g. move the pawn forward one square). In robotic applications, however, action spaces are often continuous (e.g. application of torque). Continuous action spaces represent much more difficult problems for reinforcement learning systems.

Time horizon. Some reinforcement learning tasks are limited in duration or the number of steps. Other reinforcement learning tasks are designed to go on indefinitely and therefore have an infinite horizon.

12.3 Reward functions

One of the most important choices in designing a reinforcement learning system is the choice of the reward function. As discussed in the elevator example above, the reward function will define what the system learns.

Making a poor choice of reward function can result in unintended behaviors. For example, OpenAI researchers built a reinforcement learning system to learn a game named CoastRunners where the goal of the game is to navigate a boat race faster than the other players, and extra points are won by hitting targets along the route. As it turned out, the reward function put too much emphasis on hitting the targets and the reinforcement learning system learned to drive around in a circle hitting the same three targets over and over and never finishing the race.

12.4 Model-based reinforcement learning

When a dynamics model is not available, approximation methods can be used to infer the future value of rewards to improve the policy. Two commonly used methods are temporal difference and Monte Carlo methods. Temporal difference methods analyze the projected returns of the current step compared with the prior step and updates the policy at each step. In contrast, Monte Carlo methods wait until the end of a point or other episode to update the policy. Temporal difference models are said to be bootstrapped because the value estimate for each state or state-action pair is based on estimates of the values and probabilities of successor other states or state-action pairs.

If a dynamics model does not exist, one alternative is to learn a dynamics model (using approximation methods) from experience with the environment. Once a model is learned, one can then use either dynamic programming or approximation methods to learn a policy. If a reinforcement learning system has or learns a dynamics model and uses that model to create a policy, it is said to be model-based.

The other alternative is to use approximation methods directly and not try to learn a model. If it doesn’t have a dynamics model and doesn’t try to learn one, the reinforcement learning system is model-free.

Model-free reinforcement learning systems have had great success in game-playing. These reinforcement learning systems typically require millions of samples to learn to play a game. For game-playing, this often isn’t a problem because samples can be generated by playing the game against itself. Simulation-based environments and game-playing environments can provide high numbers of observations quickly and at relatively low cost for reinforcement learning systems.

In contrast, obtaining observations in robotics and autonomous driving is a slow process, and mistakes are expensive. For example, how often can a robot fall over while learning to walk without breaking? Real-world machines are subject to wear and tear and require periodic maintenance.

As a result, for reinforcement learning applications like robotics where mechanical systems wear out, more sample-efficient model-based reinforcement learning algorithms are typically used. Model-based reinforcement learning systems are often used for robotics skills like locomotion (e.g. robotic walking). Another advantage of model-based reinforcement learning systems is that, because a model of the environment is learned, the model can theoretically be used to generalize to previously unseen instances of the problem domain and for other tasks in the same environment.

In contrast, model-free reinforcement learning methods do not generalize to other tasks even in the same environment (e.g. small changes to a game like Breakout require re-training from scratch).

The chapter on robotics explains how researchers are using model-based reinforcement learning systems to attempt to achieve human-like planning and imagination capabilities.

12.5 Model-free reinforcement learning

12.5.1 Q-Learning

The value iteration method described above for use when a dynamics model is available computes the value of a state but can be computationally difficult. Q-Learning (Watkins, 1989) is a method of approximating the value of taking an action from a given state and does not require a dynamics model. Q-learning is a form of temporal difference learning.

Q-learning uses a Q-function to approximate the value of a state-action pair. The computed value is known as a Q-value. Q-learning starts with a random Q-value for each state-action pair. Then a state-action pair is sampled, and the resulting state and any immediate reward are observed. Next, an expected future sum of rewards is computed by selecting an action for the new state and each following state by choosing the action with the maximum predicted Q-value. Then, a new Q-value is computed by taking the immediate reward plus the expected future sum of rewards. Finally, the predicted Q-value is adjusted to a value that is somewhere between the predicted Q-value and the new Q-value.

A learning rate parameter determines how quickly the Q-value transitions from predicted to new Q-values. This process repeats resulting in more and more accurate predicted Q-values.

Importantly, the Q-learning system does not need to visit every state-action pair. Instead, it can visit only a relatively small number of states and actions and generalize to similar states and actions to cover the whole state-action space.

This generalization process is like the generalization that occurs in supervised learning where the supervised learning system encounters a set of training examples and learns to generalize to unseen examples. Early in the learning process, the system makes random choices until it stumbles onto choices that produce positive rewards and incorporates what it learned into its early policies. However, those early policies are relatively poor policies that need considerable improvement. As a result, it doesn’t make sense for the system to always use these early policies when making action choices.

For a reinforcement learning system to learn, it often needs to take some random actions in order to find the best actions. Reinforcement learning game-playing systems often learn by playing games against themselves. Initially, the reinforcement learning system might take random actions and lose most games. However, with enough randomly-played games, one can imagine the reinforcement learning will randomly score points.

In order to get to the point where it plays a good or great game, a reinforcement learning system can’t simply choose the exact same action for a given state all the time. Instead, it must explore. More specifically, the reinforcement learning system should usually choose the action it thinks is best but not always. In other words, it needs to treat the selection of an action as a probabilistic choice.

On the other hand, as the reinforcement learning algorithm starts to produce better results, it needs to do less and less exploration (i.e. probabilistic choice) and more and more choosing what it believes to be the best action. This tradeoff between exploring via random choices and exploiting the learned policy is known as the exploration/exploitation trade-off. This is very different than supervised learning where there is no notion of an exploration/exploitation trade-off.

A real-world example of this trade-off is the two-armed bandit problem that people encounter in a casino with slot machines that have two arms that can be pulled. If the gambler pulls one arm and receives a reward, should they stick with that arm or keep alternating the arms until they really have enough data to see which works better. (Of course, this will always be an illusory decision in a casino where the actual probabilities of reward are equal).

Once the policy is trained, actions will be chosen greedily (i.e., the action with the highest Q-value will always be chosen). However, during training, to balance exploration and exploitation, actions will sometimes be chosen randomly (for exploration) and sometimes will be chosen based on the Q-values as described above (for exploitation).

One method of encouraging reinforcement learning algorithms to perform more exploration is to use a maximum entropy regularizer or a separate maximum entropy objective. This forces the algorithm to behave as randomly as possible while still learning to maximize rewards effectively (e.g. Harnooja et al, 2018; 2019).

At each step of Q-learning, there is an implicit policy that says take the action with the highest Q-value. However, because the training methodology sometimes follows this policy and other times does not follow the policy and instead chooses randomly, this Q-learning is off-policy learning. In contrast, reinforcement learning algorithms that always choose actions based on the current policy use on-policy learning. Robotics researchers (Ibarz et al, 2021) report finding that off-policy reinforcement learning algorithms generally require about an order of magnitude fewer samples than on-policy methods. The primary reason off-policy methods are more sample efficient is that, because they don’t assume that a sample originates from the current trained policy, samples can be reused.

The SARSA reinforcement learning algorithm, which stands for state-action-reward-state-action, learns a Q-value similarly to Q-learning. The SARSA algorithm also samples a state-action pair and observes the new state and any immediate reward. However, it then chooses the next action for the new state based on a policy derived from the predicted Q-value and is therefore an on-policy temporal difference method.

The main differences between Q-learning and SARSA are safety and the likelihood of finding the optimal policy. SARSA will avoid an action with a large negative reward like walking off a cliff while it is exploring (Sutton and Barto, 2018). However, Q-learning is more likely to find the optimal policy and do it faster if it doesn’t walk off a cliff.

Meta researchers (Ding et al, 2024) developed a technique call Diffusion World Models (DWM) that improves Q-learning by incorporating the diffusion techniques that work so well for image generation. Diffusion systems learn to predict a series of future image states in which the final state is a de-noised image. When applied in a reinforcement learning context, DWM similarly learns to predict a series of future states along with their values and expected rewards. In other words, it effectively learns a model of the environment. This enables Q-learning to be done offline using the predicted future states thereby dramatically reducing the number of real-world interactions required for training.

12.5.2 Policy-based approximation

In policy-based approximation, a policy is learned directly. Policy gradient algorithms are the most common type of policy-based approximation. These algorithms

- Start with a random policy

- Use the policy to generate samples

- Estimate the return of the policy

- Improve the policy using gradient descent

- Go back to step (2)

There are two major advantages to policy-based reinforcement learning: First, it can be computationally advantageous in a very high-dimensional or continuous action space. In Q-learning, one finds the maximum value over all possible actions and, if there are a large number of possible actions (e.g. a million actions), this computation can be very time-consuming but researchers have found ways of reduced the time required (e.g. Gu et al, 2016).

Second, it can be used to learn a stochastic policy. If you have a full dynamics model, then a deterministic policy, in which the same action is always taken for a given state, will often make sense. However, when the state-action space is large or continuous and the dynamics model can only be approximated, a stochastic model often performs better. This is because many states may look the same to the system but require different actions. Also, in some environments such as game-playing, it’s often strategic not to be completely predictable.

There are also two major disadvantages of policy-based reinforcement learning: First, policy-based approximations tend to have a higher variance than value-based approximations. For example, if reinforcement learning were used to learn a game of darts, the system would have a lot of big misses (Juliani, 2018). Second, it can be much slower than value-based learning and can be less efficient because the cost of computing the policy value can be quite high.

Actor-critic reinforcement learning methods were developed to reduce the problem of high variance. In actor-critic reinforcement learning models, both a policy (the actor) and a value function such as a Q-value (the critic) are learned.

The critic doesn’t make any action choices. Instead, the action choices are made by the actor but are informed by the critic. The job of the actor is to improve the policy. The job of the critic is to evaluate the current policy while it’s learning an optimal value function. The reward is only known to the critic.

More specifically, instead of adjusting the policy by computing the expected reward of a policy, the policy is adjusted in the direction of the maximum Q-value. As a result, where policy-based methods might require huge numbers of samples to reduce variance, actor-critic methods reduce variance with much smaller sample sizes.

One problem with actor-critic methods is that they introduce bias which means that they might not find the optimal policy. Trust region policy optimization (TRPO) (Schulman et al, 2015) is an actor-critic reinforcement learning method that reduces the bias by adding a constraint to the cost function that penalizes it for large policy changes during an update. This causes the policy to change more smoothly.

Proximal policy optimization (Schulman et al, 2017) is a reinforcement learning method that is a variant of TRPO but requires far fewer samples.

12.5.3 Game playing

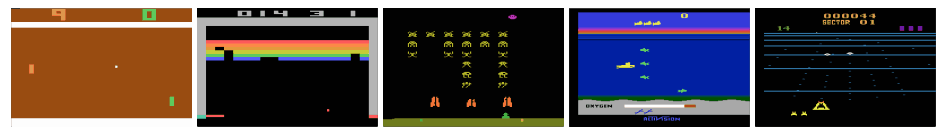

Reinforcement learning for game-playing really came into its own in 2013 when a little company in London named DeepMind used neural network technology to learn Q-functions to play 7 video games (Pong, Breakout, Enduro, Beam Rider, Q*bert, Sequest, and Space Invaders) on an Atari 2600 (Mnih et al, 2013), some of which are shown below:

It learned to play three games (Pong, Breakout, and Enduro) better than a human! Shortly thereafter Google bought the company.

The reinforcement learning system was named Deep Q-Network (DQN) and learned to play 49 Atari 2600 games – 29 of them with human-level performance. Most impressively, it accomplished this using only screen frames (i.e. arrays of pixels) as inputs.

There were no handcrafted features. The DQN approach was to take as input game screens (210 x 160 pixels x 3 RGB values), pass them to a preprocessor that extracted the luminance, and converted the inputs to 84 x 84 x 4), and passed those on as inputs to a convolutional network.

The methodology used is similar to supervised learning in that it optimizes a function using gradient descent over a large set of episodes. The Q function is essentially the sum of squares of the actual rewards minus the reward predicted by the Q function weights.

The DQN system played games against itself and recorded its experience in a replay memory. The replay memory contained experiences where each experience includes the state (a state is a concatenation of four consecutive frames in DQN), action, next state, and reward) and held somewhere around 250,000 experiences with newer experiences replacing older experiences.

The learning process used sampling from this replay memory in mini-batches of 32 experiences rather than using consecutive states. The sampling eliminated the correlation of consecutive states (in a video game, one frame looks very much like the next video frame). For each experience, the actual Q value was compared to the value predicted by the current network, and the difference was used as in the cost function to update the network using gradient descent.

They also periodically copied the weights in the network to a target network. The predicted Q values were computed by the target network, not the real network. This eliminated the problem of the weights changing too quickly. According to DQN author Vladimir Minh: “Changing the value of one action will change the value of other actions and similar states. This network can end up chasing its own tail because of bootstrapping.”

DQN could also learn to sacrifice short-term rewards for long-term rewards. For example, a submarine shooting fish keeps going until it almost runs out of oxygen, then stops shooting fish to surface and get more oxygen because if it runs out of oxygen the game is over.

The DeepMind team has since made a number of improvements to the DQN reinforcement learning method. For example, one of the limitations of the Q-learning algorithm is that it works with expected rewards that take into account the average reward but not the full distribution of rewards. This means that a state-action pair with a high probability of a small reward will be treated the same as one with a low probability of a high reward.

The C51 system (Bellemare et al, 2017) transformed the Q-Learning algorithm from a regression algorithm (predicting the reward) to a classification algorithm that takes into account the whole distribution of possible reward values by dividing it into 51 buckets. C51 more than doubled the performance of DQN on the Atari games.

The DeepMind team has also studied the use of actor-critic reinforcement learning methods for playing Atari. The asynchronous actor critic (A3C) reinforcement learning algorithm (Mnih et al, 2016) combines a Q-Learning network for learning the value function with a policy network for taking actions.

Most importantly, the reinforcement learning algorithm uses multi-threading to execute multiple copies of the algorithm simultaneously. This eliminates the need for a replay buffer and also decorrelates the data to some degree.

The A3C algorithm outperformed DQN and a number of its variants and also significantly reduced training time. Another improvement involves training the system on auxiliary tasks in an unsupervised manner. Q-Learning has been one of the most successful classes of algorithm for reinforcement learning. However, it requires a huge amount of example data which can be a problem especially when rewards are sparse.

A group of Google DeepMind researchers (Jaderberg et al, 2017) attacked this problem by having the reinforcement learning agent learn about the environment via auxiliary prediction and control tasks. The UNREAL (Unsupervised Reinforcement and Auxiliary Learning) agent used the A3C algorithm to learn Atari games. However, the agent was also asked to do three auxiliary tasks using a network that shared some layers with the A3C network. These tasks were:

- Use Q-Learning to learn a policy that maximizes the change in the visual inputs. The visual inputs were divided into 20×20 grids. This defines 400 off-policy reinforcement learning tasks, one task to maximize the change for each cell in the grid. This helps the convolutional network “see” better and improves the network representation of how the recurrent (LSTM) network representations impact the environment.

- Ask the agent to sample random trajectories and predict whether or not a reward would come. This helps the network learn which features impact short-term rewards.

- Sample trajectories from the replay buffer and update the value function more frequently than the policy in order to speed up learning of the value function.

One important point on these auxiliary tasks and the second one, in particular, is that they can be done off-policy so that one can change the distribution of samples. For example, one can use trajectories that more frequently result in rewards than during primary task training. The result was that 10 times fewer samples were required than for a standalone A3C actor-critic reinforcement learning algorithm and performance was 60% higher on several games.

The DeepMind team also built a system, AlphaGo, to play the game of Go that beat the European Go champion five straight games and achieved a 99.8% win rate against other Go programs (Silver et al, 2016). Go is considered even more complex than chess because the number of possible moves in a Go game is 10360 vs 10123 for Chess. To evaluate all possible Go moves with gigahertz processor technology would take longer than our universe will exist. The reinforcement learning system received supervision in the form of thousands of games as input and then refined the system by playing games against itself. It used a combination of value-based network to evaluate board positions and a policy network to select moves.

The same DeepMind team (Silver et al, 2017) then created a generic game-playing algorithm based on the AlphaGo system that learned Chess, Shogi (Japanese chess), and Go. This reinforcement learning system achieved superhuman performance on all three games within 24 hours. Most remarkably, it did so without any supervision other than the rules of the game. In each case, it started playing games against itself and used the game outcomes as supervision.

OpenAI Five (Berner et al, 2019) was the first AI system to defeat human world champions in an esports game. The game, Dota 2, is a difficult game for AI systems because it has long time horizons, imperfect information, and complex, continuous state and action spaces. The OpenAI Five system was trained via self-play on a million frames per second over a ten month period.

Similarly, DeepMind developed AlphaStar, a system that mastered play on the esports real-time strategy game StarCraft II.

Facebook has also been working on games and has recently released an open source framework named Polygames for training AI bots through self-play.

Interestingly, systems that are trained via self-play to learn games like Go that can beat the best human players can themselves be beaten by adversarial networks trained specifically to beat them. A group of researchers (Wang et al, 2022) trained a model to play against the current (circa 2022) state of the art Go system, KataGo (Wu, 2020). Rather than being trained via self-play, the model was trained by playing against a frozen version of KataGo. It learned a very specific strategy that exploited a weakness in KataGo. This strategy enabled it to be KataGo 50% of the time despite the fact that it does poorly against even weak human players.

12.5.4 Advantages of model-free methods

If you have a dynamics model of the environment, you can use the model to evaluate candidate policies.

Model-based reinforcement learning systems offer a number of advantages:

Fewer samples required

Model-free reinforcement learning systems have had great success in game-playing. These systems typically require millions of samples to learn to play a game. For game-playing, this often isn’t a problem because samples can be generated by playing the game against itself.

However, for applications like robotics, where mechanical systems wear out, more sample-efficient model-based reinforcement learning algorithms are typically used. Robotics researchers (Ibarz et al, 2021) report finding that model-based reinforcement learning algorithms generally require about an order of magnitude fewer samples than model-free methods.

Transferability

Because a model of the environment is learned, the system can be used to learn dynamics models and policies for other instances of the problem domain and for other tasks in the same environment. Model-free reinforcement learning methods do not generalize to other tasks even in the same environment.

Supports planning

In order to be used for planning, a reinforcement learning system needs to have a model of the environment that can be used to predict future states and rewards.

To learn a dynamics model by interacting with the environment, the reinforcement learning system needs to experience a large number of transitions. Then a transition function can be estimated using supervised learning that compares the predicted transitions with the actual sampled transitions.

Learning a model is usually more sample efficient (i.e. requires less training data) than model-free reinforcement learning methods. However, the disadvantages of model-based approaches are:

- It can be easier to learn a policy directly than learning a model.

- One can rarely learn a perfect model because it is hard to visit every state during training. The resulting model is usually imperfect and become over-optimistic about certain actions. These errors then compound with additional action choices causing breakdowns. As a result, a model-free approach often performs better for complex tasks.

One of the limitations of model-based reinforcement learning algorithms is that it can be hard to create a single, monolithic policy for a complex task because they often get stuck in local optima and require too many samples.

Guided policy search, which was developed by University of California at Berkeley researchers (Levine et al, 2013), breaks down the problem by training a set of local policies from which an overall policy is derived. Guided policy search uses a policy (actually a set of policies) created from kinesthetic-based training as a starting point. The Berkeley researchers demonstrated the method on robotics tasks such as simulated 3-dimensional humanoid running and the method is heavily used.

12.6 Environments with sparse rewards

One problem with reinforcement learning is that rewards can be sparse, i.e. in many environments rewards occur relatively infrequently especially when exploring using random actions. This can lead to very slow learning because learning only occurs when rewards occur. Nothing is learned from trajectories that lead to no reward.

OpenAI researchers (Andrychowicz et al, 2017) suggested learning from failures to achieve a reward as well as successes. In their hindsight experience replay (HER) algorithm, the goal isn’t to reach just one final state. Instead, the goal is to learn how to get to every state. This way, learning occurs even with failure to reach the final state because every trajectory results in achieving some state.

12.7 Supervised learning vs. reinforcement learning

In supervised learning, the system is presented with examples that are labeled with the correct answer. The goal of the supervised learning system is to generalize from the example seen in the training to unseen examples. It does this by optimizing a cost function which results in the system being able to predict correct answers for examples not in the training set.

In reinforcement learning, the examples are the states of the environment. However, the states are not labeled with correct actions or even with reward values. Instead, the rewards for each state are:

Delayed: The reward is usually delayed past the current state and may not occur until many state-action sequences have occurred.

Sparse: The rewards often occur infrequently.

Noisy: The rewards for a given state sometime occur in the future, sometimes do not occur, and sometimes are negative (e.g. a lost point in Pong).

Reinforcement learning systems use exploration and observation to learn the labels, i.e. the resulting states and rewards for state-action pairs. Reinforcement learning systems learn a function like supervised learning systems that can generalize to previously unseen examples (i.e. unobserved state-action pairs).

The methodology is similar in some ways to supervised learning. For example, learning a Q-function can be modeled with a wide range of algorithms from linear functions to neural networks, and learning occurs via a gradient descent algorithm over a large set of episodes. The Q function is essentially the sum of squares of the actual rewards minus the reward predicted by the Q function weights and functions like a cost function in supervised learning.

One reinforcement leading researcher (Levine, 2022) explained the key differences between supervised and reinforcement learning as follows:

(1) Reinforcement learning makes decisions while supervised learning makes predictions. For example, supervised learning may be used to predict the number of orders a customer will make but how to use that information to, say, improve profits require human decision-making. In contrast, reinforcement learning could theoretically be given a decision-making objective like maximizing profits.

(2) Supervised learning assumes i.i.d. (independent and identically distributed data). Supervised learning only works where the training and real-world distributions are identical. Supervised learning systems are therefore vulnerable to distribution shift where the real-world distributions change. For example, a system trained pre-pandemic to predict worker sick days might not work well during the pandemic.

(3) Supervised learning systems cannot use feedback without re-training the whole system. In contrast, reinforcement learning systems continually incorporate feedback to improve their policies.

(4) Reinforcement learning systems use sequential decision making. Sequential decision making is a natural part of reinforcement learning systems. Feedback on actions taken is critical for reinforcement learning systems. Each action the system takes influences future observations. A system that assumes i.i.d. cannot do this.

It is also easier to tune supervised learnings models than it is to tune reinforcment learning models. The selection of less than optimal parameters in a reinforcement learning setting has much more of a negative impact on the training time of reinforcement learning models. Andrew Ng (2022) suggested that poor hyperparameter choices for reinforcement learning models could cause the system to train 100 times more slowly and perhaps not converge at all where the impact would only likely be 3-10x for supervised learning.

As with supervised learning, when neural networks with more than one hidden layer are used, instead of just reinforcement learning, it is often termed deep reinforcement learning.

12.8 Inverse reinforcement learning

A system that uses reinforcement learning to infer the reward function is known as inverse reinforcement learning (IRL) (Russell, 1998). IRL has been found to be particularly effective in driving, navigation, and robotics applications.

In robotics contexts, IRL is often referred to as inverse optimal control and the goal is to infer a cost function which is essentially the same thing as a reward function. However, the optimal IOC policy will minimize the cost while the optimal IRL policy will maximize the reward.

IRL systems assume that an expert demonstrator has a reward function that can be expressed as a linear combination of features and the goal. In a self-driving context, the features might be characteristics like speed, distance to the car in front, distance from the middle of the lane, and so on or they might be higher-order features such as “motorcycle 4 car lengths behind” (Levine et al, 2010). Each feature has an unknown weight.

The goal of the reinforcement learning system is to learn the weights that make up this linear function. In a robotic context, features might be things like “distance from large objects”. The general form of the algorithm as defined by Ng and Russell (2000) is:

- Randomly choose a set of weights for the reward function which also defines a new policy. The new policy also becomes the first entry in a list of generated policies.

- Estimate the value of all the generated policies (initially there is only one generated policy) by running several trials (e.g. using a driving simulator) and compute the average cumulative reward using the new reward function for the trials.

- Generate a new reward function by finding a set of weights that maximizes the difference between the expert’s policy value and the values of each of the generated policies.

- Add the new policy to the list of generated policies and go back to step 2.

- Stop when the reward function generates a policy value that is close enough to the expert’s policy value.

Another group of researchers (Cristiano et al, 2017) showed that reward functions could be learned from human feedback for tasks that are hard for people to demonstrate. This idea of reinforcement learning from human feedback was used to turn GPT-3 into the wildly popular ChatGPT.

12.9 Offline reinforcement learning

Offline reinforcement learning algorithms use previously collected data to train systems. Reinforcement learning algorithms have historically been primarily online algorithms in which the system interacts with the environment to collect experience and uses that experience to improve its policy (Sutton and Barto, 2018).

However, online interaction is impractical in many environments. In environments such as self-driving cars, it can be dangerous. In robotics, robot failures can lead to expensive damage. In healthcare, people could die. Finally, the number of interactions required to learn a good policy might be so large (as it often is for supervised learning) that online training is impractical.

Another disadvantage of online algorithms is that it is difficult to generate the vast amounts of training data that have proved so valuable in supervised learning. One notable exception is learning to play Atari and other games where self-play enables learning based on vast amounts of experience.

In offline reinforcement learning, data is collected by applying one policy to the encountered states of the environment. That data is then used to train a policy offline (i.e. without interaction with the real-world environment) and, once trained, is put into real-world production use.

Offline reinforcement learning has been used for several years to train robots and self-driving vehicles. For example, most Teslas on the road track the environment state and the computed actions and compare those actions to ones made by the human driver. This information is then sent back to Tesla HQ which provides a massive amount of data that can then be used in simulations. Similarly, robots are often trained in simulators.

One problem with offline methods is that, unlike online methods, they assume i.i.d. As a result, they are vulnerable to distribution shift, just like supervised learning systems. This can make transfer of the learned system to the real world difficult. If the distribution of offline data differs from the real-world distribution or if the real-world data distribution changes, it will cause problems for the learned system. Conservative Q-learning (Kumar et al, 2020) is a technique for mitigating but eliminating this issue.

Offline learning also make exploratory behavior difficult. Exploration can only occur within the confines of the offline data distribution. See Levine et al (2020) for a review of offline learning issues and an overview of approaches to solving those issues.

D4RL is a dataset created by Berkeley and Google Brain researchers (Fu et al, 2020) that is designed to drive progress in offline reinforcement learning.

12.10 Unsupervised reinforcement learning

It is often difficult to collect enough data to adequately train systems with reinforcement learning. The same issue occurs in supervised learning. For supervised learning, the primary approach to overcoming limited supervisory data is to use unsupervised pre-training followed by fine-tuning (see the chapter on transfer learning). Researchers are now starting to apply analogous approaches to reinforcement learning.

For example, Berkeley researchers (Liu and Abbeel, 2021) used contrastive loss pre-training that was similar to the pre-training in SimCLR. Patches of Atari game images were slightly modified using random pixel shifts and color jitter. The system was trained to distinguish the augmented patches from random patches. This was environmental pre-training without the presence of rewards. Then, with a relatively small amount of fine-tuning of the reinforcement learning algorithm using rewards, the system was able to learn to play the games as well as systems trained solely with large amounts of reward data.

A team of Berkeley, FaceBook, and Google researchers created the Decision Transformer (Chen et al, 2021) which uses an autoregressive learning objective to train a model as a sequence learning problem. It outperforms many of the earlier model-free, offline learning systems on tasks like Atari games. A multi-game version (Lee et al, 2022) was able to play a suite of 46 Atari games simultaneously with near-human performance.

In a separate effort, DeepMind researchers (Baker et al, 2022) used unsupervised training in a transformer architecture to learn to play the video game Minecraft.

Berkeley researchers (Janner et al, 2021) created the Trajectory Transformer which also treats reinforcement learning as a sequence modeling problem. The system is trained to be a planning tool that produces a sequence of actions that result in high rewards.

12.11 Data augmentation

Another approach to environment with inadequate reward is to use data augmentation techniques. For example, Kostrikov et al (2021) significantly improved the sample efficiency of the DQN algorithm (see above) on Atari games by augmenting the data with random images shifts.

© aiperspectives.com, 2020. Unauthorized use and/or duplication of this material without express and written permission from this site’s owner is strictly prohibited. Excerpts and links may be used, provided that full and clear credit is given with appropriate and specific direction to the original content.